Highlight 1

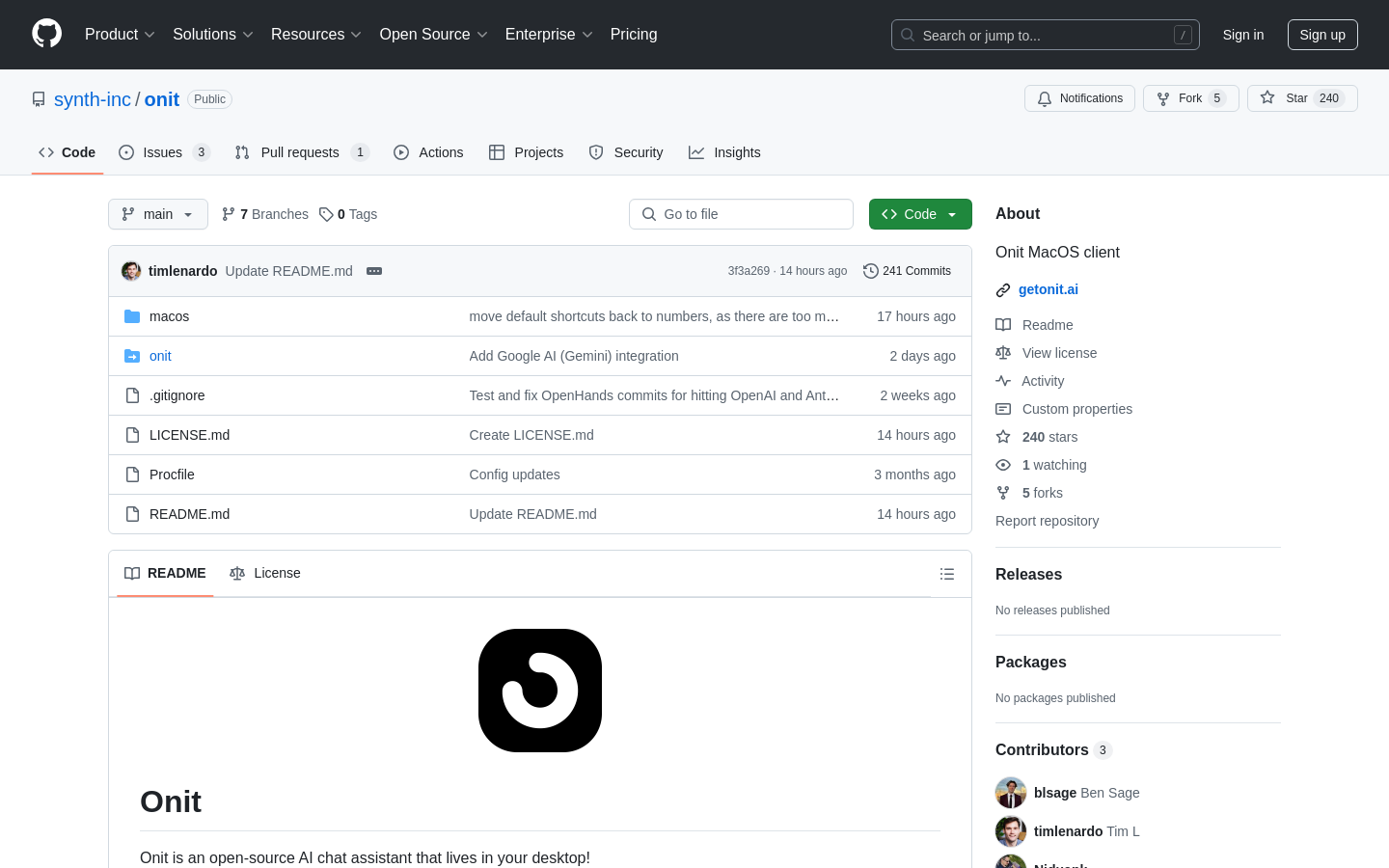

Onit lets users work with a variety of AI models, whether they run locally or are hosted by remote providers, which gives users flexibility and freedom of choice.

Highlight 2

The local mode feature is excellent for privacy-conscious users, as it allows AI processing to occur on their own machine without the need to upload personal data.

Highlight 3

The app's open-source nature is a significant advantage: the community can customize and extend it, which improves its potential to grow and adapt.

Improvement 1

The application currently supports only macOS; extending support to Windows and Linux would broaden its user base.

Improvement 2

The setup process for local models (via Ollama) may be complicated for non-technical users. Clearer instructions or an automated setup process would improve the user experience.

Improvement 3

While the app allows file uploads, it could benefit from better integration with a wider range of file formats and enhanced support for large files.

Product Functionality

Consider adding more model providers and expanding support for offline functionality, such as local RAG (retrieval-augmented generation) and autocontext features.

UI & UX

The user interface could benefit from a more streamlined onboarding process to make it easier for new users to understand how to set up local models and switch between providers.

SEO or Marketing

To increase visibility, consider highlighting the open-source nature of Onit in marketing materials and offering more tutorial content or use cases to attract new users.

Multi-Language Support

Implementing multi-language support would make Onit more accessible to a global audience, especially to non-English-speaking users.

What AI models can I use with Onit?

Onit supports models from multiple providers, including OpenAI, Anthropic, xAI, and GoogleAI. You can also run models locally using Ollama.

Does Onit collect my data?

Onit minimizes data collection. It does not store prompts or responses, but it collects crash reports and a single 'chat sent' event for analytics purposes.

How do I use Onit's local mode?

To use Onit in local mode, you need to install Ollama. Onit will fetch the list of models available on your system through Ollama's API.
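To illustrate the kind of request involved, here is a minimal Python sketch that asks a locally running Ollama server which models are installed. It is not Onit's own code. It assumes Ollama is listening on its default address, `http://localhost:11434`, and uses the `/api/tags` endpoint, which returns a JSON object containing a `models` array. The helper names `parse_model_names` and `list_local_models` are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama runs on its default local address (no custom OLLAMA_HOST).
OLLAMA_URL = "http://localhost:11434"


def parse_model_names(payload: dict) -> list[str]:
    """Extract model names from an Ollama /api/tags response body (hypothetical helper)."""
    return [model["name"] for model in payload.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> list[str]:
    """Query the local Ollama server for its installed models (hypothetical helper)."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        payload = json.load(resp)  # the response body is JSON
    return parse_model_names(payload)


if __name__ == "__main__":
    try:
        for name in list_local_models():
            print(name)
    except OSError as err:  # URLError subclasses OSError; raised if Ollama is not running
        print(f"Could not reach Ollama at {OLLAMA_URL}: {err}")
```

If the script prints nothing, Ollama is running but no models have been pulled yet. If it prints the error message, the Ollama server is not reachable at the assumed address.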