Highlight 1

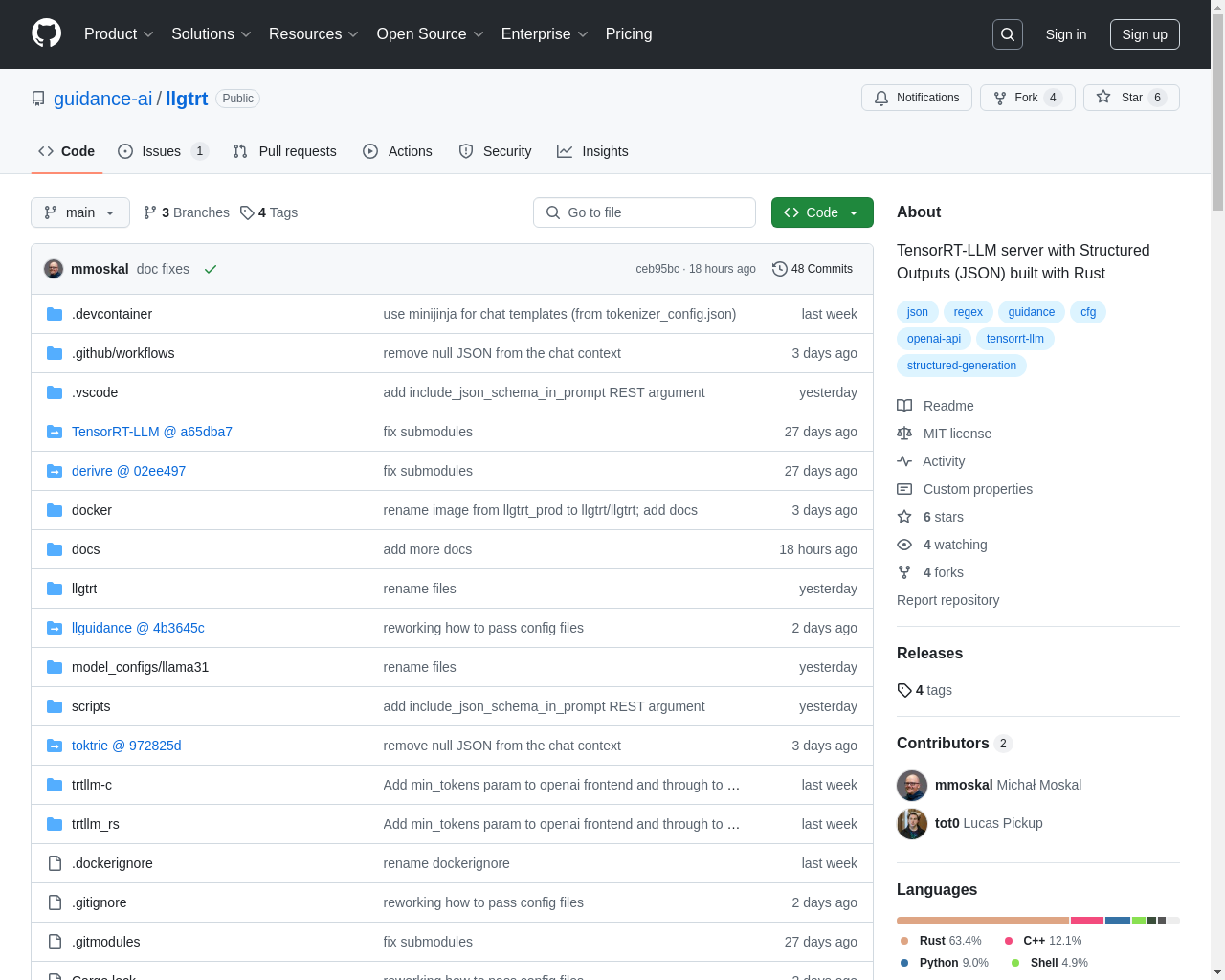

Being written in Rust gives the server high performance and efficient memory usage, which is particularly beneficial for demanding workloads such as serving large language models.

Highlight 2

Compatibility with the OpenAI API makes it easier for developers familiar with the OpenAI ecosystem to integrate and use this server.
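To illustrate what that compatibility means in practice, here is a minimal sketch of an OpenAI-style chat completion request built with only the Python standard library. The base URL, port, and model name are placeholders chosen for illustration, not values confirmed by the project; the `/chat/completions` path and the payload shape follow the general OpenAI convention.

```python
import json
import urllib.request

# Assumption: a locally running server; host, port, and model name are placeholders.
BASE_URL = "http://127.0.0.1:3000/v1"


def build_chat_request(prompt, model="model", base_url=BASE_URL):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 100,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request):
    """Send the request and return the decoded JSON response body."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

With a server actually running at the assumed address, `send(build_chat_request("Say hello."))` would return the parsed response; in the OpenAI convention the generated text sits under `choices[0].message.content`.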

Highlight 3

Enforcing JSON schemas and full context-free grammars gives users predictable, well-formed output, which improves application reliability.
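As a rough sketch of how a client might ask for schema-constrained output, the snippet below builds a request body that uses the OpenAI-style `response_format` field with a JSON schema. Whether llgtrt accepts exactly this shape is an assumption here; the schema, the `person` name, and the model name are all hypothetical. The helper at the end is only a cheap sanity check on the client side, not a full JSON Schema validator.

```python
import json

# Hypothetical schema used only for illustration.
PERSON_SCHEMA = {
    "type": "object",
    "properties": {
        "name": {"type": "string"},
        "age": {"type": "integer"},
    },
    "required": ["name", "age"],
    "additionalProperties": False,
}


def build_structured_payload(prompt, schema, model="model"):
    """Build an OpenAI-style request body that asks for schema-constrained JSON."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        # Assumption: the server honors the OpenAI-style response_format field.
        "response_format": {
            "type": "json_schema",
            "json_schema": {"name": "person", "schema": schema, "strict": True},
        },
    }


def has_required_keys(raw_json, schema):
    """Check that the text parses as a JSON object containing every required key."""
    data = json.loads(raw_json)
    required = schema.get("required", [])
    return isinstance(data, dict) and all(key in data for key in required)
```

When the server enforces the schema during generation, a check like `has_required_keys` should never fail; keeping it in client code is simply a defensive habit.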

Improvement 1

While the project is promising, more comprehensive documentation would improve usability for new users and speed up onboarding.

Improvement 2

Increasing developer engagement through forums or issue tracking can foster a supportive community around llgtrt, which can lead to faster beta testing and a steadier flow of feature requests.

Improvement 3

Although llgtrt is primarily a server, a web interface for monitoring or configuring it could enhance user experience and accessibility.

Product Functionality

Integrate more detailed logging and monitoring tools to enhance visibility into server performance and request handling.

UI & UX

Improve the documentation site by incorporating a clean, navigable interface with example use cases and tutorials to better guide new users.

SEO or Marketing

Implement SEO strategies by creating content around use cases and tutorials, which can help improve visibility and attract new developers interested in server hosting for language models.

Multilingual Support

Consider adding multilingual support in the documentation to cater to a broader audience and engage non-English speaking developers.

What programming languages does llgtrt support?

llgtrt itself is written in Rust. The llguidance library it uses to apply sampling constraints can also be used from C and Python.

Can I deploy llgtrt in a production environment?

Yes, llgtrt is designed for high-performance usage, making it suitable for production environments that require large language model inference.

How does llgtrt ensure efficient output generation?

By building on NVIDIA's TensorRT-LLM, llgtrt minimizes generation overhead and eliminates startup costs, enabling faster response times for users.